Create an emotion game using cards

Goal: learn how to detect images using QTrobot image recognition

Requirements:

In this tutorial, we implement a simple card game using QTrobot Studio and QTrobot's image recognition software. A person shows QTrobot a card with an emotion image, and the robot recognizes the image and explains the emotion to the user. Here is a short video of the scenario:

How does QTrobot's image recognition work?

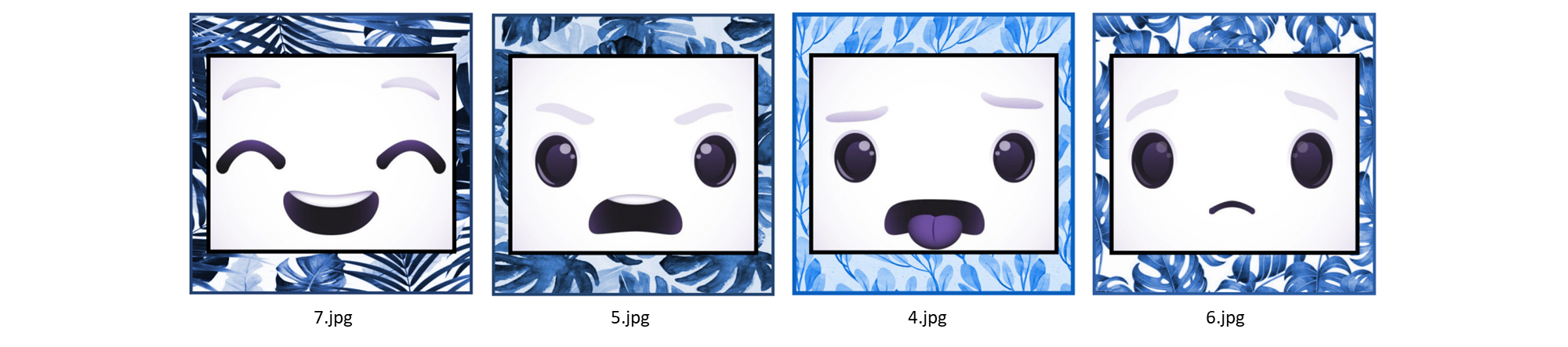

Now let's see how we can easily implement our scenario in QTrobot Studio. QTrobot runs a ROS image recognition module called find_object_2d. Using QTrobot's camera, find_object_2d detects any of the images stored in the ~/robot/data/images folder on QTrobot's QTPC. For this tutorial, we use the following images. They already exist in that folder, so find_object_2d can recognize them.

If we show one of the cards above to QTrobot, find_object_2d recognizes it. It then publishes the corresponding id (the number in the filename), along with other information, to the /find_object/objects topic. The topic uses the standard message type std_msgs/Float32MultiArray. The first element of the data list is the image id, which is the only value we need for this scenario. For example, if we show the angry face image (i.e., 5.jpg), the following message is published. The first element of the data field (5.0) is the id of the 5.jpg image.

```
layout:
  dim: []
  data_offset: 0
data: [5.0, 553.0, 553.0, 0.582885205745697, -0.03982475772500038, ...
```

---
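QTrobot Studio hides the message handling behind blocks, but it can help to see what the data list holds. The plain-Python sketch below assumes the layout described in find_object_2d's own documentation. In that layout, each detected object takes 12 consecutive floats: id, width, height, then a 3x3 homography in row-major order. This tutorial confirms only that the first element is the id. The rest of the layout, the `Detection` type, and the helper `split_detections` are assumptions for illustration.

```python
from typing import List, NamedTuple

# ASSUMED layout (from find_object_2d's documentation, not this tutorial):
# [id, width, height, h11, h12, h13, h21, h22, h23, h31, h32, h33, id2, ...]
FIELDS_PER_OBJECT = 12


class Detection(NamedTuple):
    object_id: int            # number in the image filename, e.g. 5 for 5.jpg
    width: float              # assumed: width of the reference image
    height: float             # assumed: height of the reference image
    homography: List[float]   # assumed: 9 homography values, row-major


def split_detections(data: List[float]) -> List[Detection]:
    """Split a Float32MultiArray data list into one Detection per object."""
    detections = []
    # Walk the flat list one 12-value chunk at a time; a trailing partial chunk is ignored.
    for start in range(0, len(data) - FIELDS_PER_OBJECT + 1, FIELDS_PER_OBJECT):
        chunk = data[start:start + FIELDS_PER_OBJECT]
        detections.append(
            Detection(
                object_id=int(chunk[0]),
                width=chunk[1],
                height=chunk[2],
                homography=list(chunk[3:12]),
            )
        )
    return detections


# Angry-face card (5.jpg); only the first five values come from the message above,
# the remaining homography values are made up to complete the chunk.
sample = [5.0, 553.0, 553.0, 0.582885205745697, -0.03982475772500038,
          0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]
print([d.object_id for d in split_detections(sample)])  # [5]
```

For this game, only `object_id` of the first detection matters. The other fields are shown only to make the rest of the list less mysterious.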

Implementation

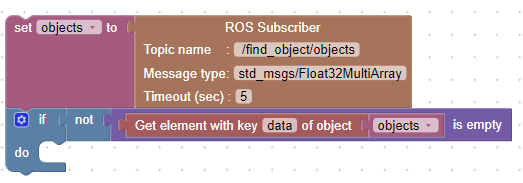

Let's see how we can implement the above scenario using image recognition and QTrobot Studio. First, we read a message from the /find_object/objects topic using the ROS Subscriber block and check whether the message is empty. Then we read the image id from the first element of the data list. Depending on the recognized image id, we ask the robot to explain the emotion using speech, facial emotions and gestures.

1. Read the detected image

We can use the ROS Subscriber block to read the message published by find_object_2d, as shown here:

find_object_2d publishes messages continuously, whether or not an image has been detected. Therefore, we need to check the data field of each message to see whether an image was actually detected. If the data list is not empty, we have a detected image.
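In plain Python, the check made by the Condition block comes down to testing whether the data list is non-empty. In the sketch below, the incoming messages are simulated as plain lists. This is an assumption: they stand in for the `data` field of real std_msgs/Float32MultiArray messages received by a ROS subscriber. The sketch keeps only the messages that contain a detection, and `has_detection` is a hypothetical helper name.

```python
from typing import List, Sequence


def has_detection(data: Sequence[float]) -> bool:
    """Return True if find_object_2d reported at least one object."""
    # find_object_2d publishes even when nothing is seen; data is then empty.
    return len(data) > 0


# Simulated (shortened) data fields: nothing seen, the angry card, nothing seen.
stream: List[List[float]] = [[], [5.0, 553.0, 553.0], []]
detected = [data for data in stream if has_detection(data)]
print(len(detected))  # 1
```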

2. Extract the image ID

As explained above, we are interested only in the first element of the data list. The following blocks extract that element (the image id) and store it in the emotion_id variable:
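The same extraction in plain Python might look like the sketch below. The function name `extract_emotion_id` is hypothetical. The id arrives as a float (5.0), so the sketch converts it to an integer to match the number in the image filename (5.jpg). It returns `None` when nothing was detected, which folds the emptiness check from the previous step into one call.

```python
from typing import Optional, Sequence


def extract_emotion_id(data: Sequence[float]) -> Optional[int]:
    """Return the image id (first element of data), or None if nothing was detected."""
    if not data:
        return None
    # The id is published as a float, e.g. 5.0; convert it to the filename number.
    return int(data[0])


emotion_id = extract_emotion_id([5.0, 553.0, 553.0, 0.582885205745697])
print(emotion_id)  # 5
```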

3. React to the detected emotion

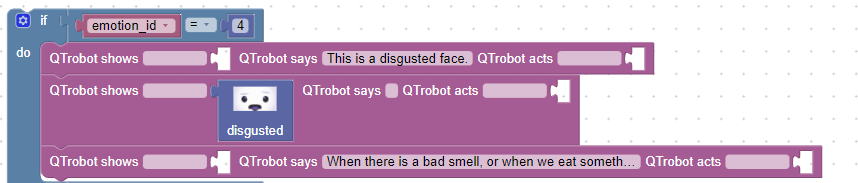

Using the image cards shown above, we can now map each emotion_id to the actual emotion and ask QTrobot to react to it. We use the standard Condition block to check the emotion id and react to it, as shown below:

For example, emotion id 4 corresponds to the disgusted emotion. We first say what we have recognized, then show the disgusted emotion and explain it a bit more using another Show, says and act block.
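A chain of Condition blocks is equivalent to looking up each id in a table of reactions. The sketch below models each reaction as data (what QTrobot says and which emotion it shows) instead of calling the robot. Only ids 4 (disgusted) and 5 (angry) come from this tutorial. The sentences and emotion names are placeholders, not QTrobot's actual emotion identifiers, and `Reaction`/`react` are hypothetical names.

```python
from typing import Dict, NamedTuple, Optional


class Reaction(NamedTuple):
    announce: str  # what QTrobot says first ("I see ...")
    emotion: str   # PLACEHOLDER emotion name, not a real QTrobot emotion file
    explain: str   # follow-up explanation said while showing the emotion


# Ids 4 and 5 are confirmed by the tutorial; add entries for your other cards.
REACTIONS: Dict[int, Reaction] = {
    4: Reaction("This is a disgusted face.", "disgusted",
                "When something smells really bad, I feel disgusted."),
    5: Reaction("This is an angry face.", "angry",
                "When something is unfair, I may feel angry."),
}


def react(emotion_id: int) -> Optional[Reaction]:
    """Look up the reaction for a card; None means the id is not handled."""
    return REACTIONS.get(emotion_id)


reaction = react(4)
if reaction is not None:
    # On the robot, each field would feed a speech or emotion block.
    print(reaction.announce)  # This is a disgusted face.
```

A table like this keeps adding a new card to a one-line change. In QTrobot Studio, the same effect comes from adding another branch to the Condition block.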

4. Put it all together

Now that we know how to detect and react to an emotion, we can repeat the previous steps to extend our scenario to the other emotions.

We also add some introductory messages at the beginning to explain the game to the user. To keep the game running for more than one round, we wrap all the blocks in the LuxAI "repeat until I press stop" block. This block keeps the game running until we stop it from the Educator Tablet.
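The overall structure can be sketched in Python: an introduction, then a loop that keeps reacting to cards until it is stopped. This is a sketch under stated assumptions:

- A `threading.Event` stands in for the Educator Tablet's stop button.
- A finite list of `data` lists stands in for the endless /find_object/objects topic.
- The id-to-sentence table and the helper `run_game` are hypothetical.

```python
import threading
from typing import Iterable, List, Sequence

# Hypothetical id -> spoken line table; ids 4 and 5 come from the tutorial.
LINES = {4: "This is a disgusted face.", 5: "This is an angry face."}
INTRO = "Show me a card and I will tell you which emotion it is!"


def run_game(messages: Iterable[Sequence[float]], stop: threading.Event) -> List[str]:
    """Introduce the game, then react to cards until `stop` is set."""
    said = [INTRO]                   # introductory message before the loop
    for data in messages:
        if stop.is_set():            # stands in for the Educator Tablet stop button
            break
        if not data:                 # nothing detected in this message
            continue
        emotion_id = int(data[0])    # first element is the image id
        if emotion_id in LINES:
            said.append(LINES[emotion_id])
    return said


stop = threading.Event()
print(run_game([[], [5.0, 553.0], [4.0, 553.0]], stop))
```

Because the stop check runs at the top of every iteration, setting the event ends the game before the next card is processed. The Studio repeat block behaves the same way.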