Expressive QTrobot: Realistic Lip Sync, Gaze Control & Face Customization

The Expressive QTrobot update introduces a new level of interactive capabilities, enhancing the robot's ability to communicate and engage with users more naturally and dynamically. This software update brings to life realistic lip synchronization, customizable facial features, and precise gaze control, allowing QTrobot to exhibit human-like expressions and behaviors. With these new features, QTrobot becomes an even more versatile and engaging platform, capable of delivering a richer, more personalized interaction experience that is not only visually captivating but also highly adaptable to different use cases and environments.

|  |  |
|---|---|
| Watch the QTrobot Speech Lip-Sync Demonstration Video | Watch the QTrobot Customized Face Video |

Features

-

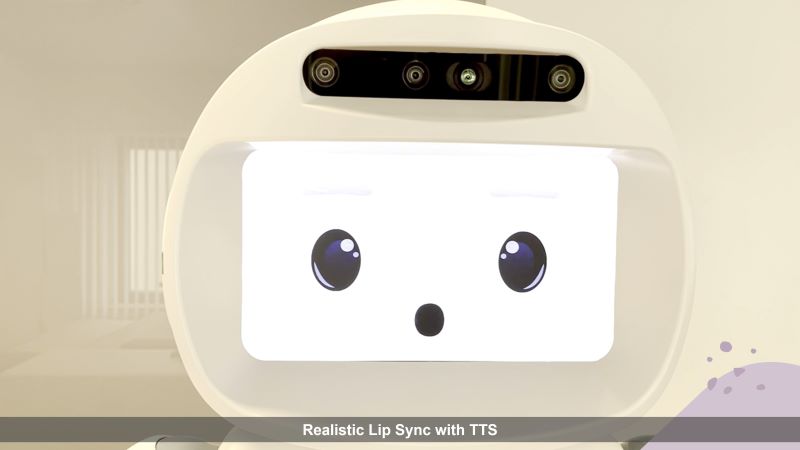

Realistic Lip Sync with TTS: QTrobot now has the ability to synchronize its lip movements with the generated speech in real-time. Utilizing viseme IDs provided by TTS engine, the robot matches its mouth movements closely with the sounds it produces, resulting in a highly realistic and expressive speaking experience. This enhanced lip-sync feature adds depth and life to QTrobot's interactions, making communication more intuitive and engaging.

- Eye Gaze Control: With the new gaze control functionality, users can control the position and movement of each of QTrobot's eyes independently through a simple ROS service. This gives precise control over where the robot directs its gaze, enabling more natural interactions that respond to the user's actions. Because each eye's position can be set individually, you can also create varied expressions, adding a unique layer of expressiveness to QTrobot's personality.

- Customizable Facial Expressions: Expressive QTrobot lets users create their own face designs. New faces are introduced by providing simple images for the background face, the eyes, and the viseme mouth shapes. The robot integrates these custom faces with the existing APIs, so lip sync, gaze control, and natural behaviors such as blinking keep working. This lets users personalize QTrobot's appearance for different settings or themes.

These features make QTrobot more expressive, adaptable, and capable of delivering an enhanced interactive experience. Whether for education, therapy, or entertainment, the Expressive QTrobot software update provides a new way to create meaningful connections with users.

Installation

Installing the update is simple. Follow these steps to run the qt_robot_interface update on QTRP:

- Access QTRP via SSH: From the Ubuntu desktop of QTPC, open a terminal and connect to QTRP using SSH:

ssh developer@QTRP

- Download and install the update: Download the qt_robot_interface_updated_2_0_0.run file and run it with sudo:

cd /tmp
wget https://github.com/luxai-qtrobot/software/releases/download/v2.0.0/qt_robot_interface_updated_2_0_0.run
chmod +x qt_robot_interface_updated_2_0_0.run
sudo ./qt_robot_interface_updated_2_0_0.run

Verify that there are no error messages to confirm that the installation was successful.
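To double-check the installation from code, the sketch below waits for the talkText service and the new /qt_robot/emotion/look service and reports any that do not appear. It assumes a sourced ROS Noetic environment with qt_robot_interface running; missing_services is a hypothetical helper, and its optional wait_for_service argument exists only so the logic can be exercised without ROS.

```python
def missing_services(names, timeout=5.0, wait_for_service=None):
    """Return the services in `names` that did not become available.

    `wait_for_service` defaults to rospy.wait_for_service; any callable with
    the same (name, timeout=...) signature can be passed instead for testing.
    """
    if wait_for_service is None:
        import rospy  # requires a sourced ROS environment
        wait_for_service = rospy.wait_for_service
    missing = []
    for name in names:
        try:
            wait_for_service(name, timeout=timeout)
        except Exception:  # rospy raises rospy.ROSException when the timeout expires
            missing.append(name)
    return missing


# Example usage on a machine connected to the robot's ROS master:
#   import rospy
#   rospy.init_node('check_expressive_update')
#   print(missing_services(['/qt_robot/behavior/talkText', '/qt_robot/emotion/look']))
```

An empty list means both interfaces were advertised within the timeout.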

Usage

Using the new lip sync feature

To use the lip sync functionality, simply ask QTrobot to speak via the /qt_robot/behavior/talkText interface. QTrobot will automatically synchronize its mouth movements with the text being spoken.

Using a publisher:

rostopic pub /qt_robot/behavior/talkText std_msgs/String "data: 'Hello. My name is Q T robot.'"

Using a service call:

rosservice call /qt_robot/behavior/talkText "message: 'Hello. My name is Q T robot.'"
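The same request can be sent from Python. This is a minimal sketch, assuming the service type is named behavior_talk_text in qt_robot_interface.srv (you can confirm it with rosservice type /qt_robot/behavior/talkText) and that rospy.init_node has already been called. say is a hypothetical helper; the optional talk argument lets you reuse one proxy or pass a stand-in.

```python
TALK_SERVICE = '/qt_robot/behavior/talkText'


def say(text, talk=None):
    """Ask QTrobot to speak `text`; qt_robot_interface lip-syncs it automatically."""
    if talk is None:
        import rospy
        # Assumed service type name; confirm with `rosservice type /qt_robot/behavior/talkText`.
        from qt_robot_interface.srv import behavior_talk_text
        rospy.wait_for_service(TALK_SERVICE)
        talk = rospy.ServiceProxy(TALK_SERVICE, behavior_talk_text)
    # The request carries the text in its single `message` field.
    return talk(text)
```

For repeated calls, create the ServiceProxy once and pass it as talk=... rather than reconnecting for every sentence.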

Using the eye gaze interface

This update introduces a new ROS service interface, /qt_robot/emotion/look, to control the eye positions on QTrobot's face. The service takes three parameters:

eye_l: The displacement (x, y) of the left eye relative to its default center position.

eye_r: The displacement (x, y) of the right eye relative to its default center position.

duration: An optional parameter specifying how long the eyes should remain in the displaced position before returning to the default position. The default value is 0, meaning the eyes will stay in the new position until another command is issued.

Example: To move the eyes to look left for 5 seconds, use the following rosservice command:

rosservice call /qt_robot/emotion/look "eye_l: [30, 0]
eye_r: [30, 0]
duration: 5.0"

Alternatively, you can achieve this in your Python code:

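import rospy
from qt_robot_interface.srv import emotion_look
rospy.init_node('qt_gaze_example')
rospy.wait_for_service('/qt_robot/emotion/look')
look = rospy.ServiceProxy('/qt_robot/emotion/look', emotion_look)
look([30, 0], [30, 0], 5.0)  # eye_l, eye_r, duration: look left for 5 seconds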
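Because each eye takes its own displacement, small helpers make common gaze patterns easier to build. The sketch below returns (eye_l, eye_r) pairs for the look service: a shared shift for both eyes plus an optional vergence term that moves the eyes toward each other. It assumes displacements use the same image coordinates as the left_eye_pos and right_eye_pos configuration values (where the left eye sits at the larger x), and the clamp limit of 40 is a hypothetical safety margin, not a documented bound.

```python
def _clamp(value, limit):
    """Limit `value` to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def gaze_offsets(dx, dy, vergence=0, limit=40):
    """Return (eye_l, eye_r) displacements for /qt_robot/emotion/look.

    dx, dy:   shift applied to both eyes (dx=30 looks left, as in the example)
    vergence: extra horizontal shift toward the centre; positive crosses the eyes
    limit:    hypothetical clamp so the eyes stay on the face
    """
    # The left eye sits at the larger x (see left_eye_pos), so moving it toward
    # the centre means decreasing x; the right eye moves the opposite way.
    eye_l = [_clamp(dx - vergence, limit), _clamp(dy, limit)]
    eye_r = [_clamp(dx + vergence, limit), _clamp(dy, limit)]
    return eye_l, eye_r


# Example with a `look` ServiceProxy for /qt_robot/emotion/look:
#   look(*gaze_offsets(30, 0), 5.0)              # look left for 5 seconds
#   look(*gaze_offsets(0, 0, vergence=15), 2.0)  # briefly cross the eyes
```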

Customization

You can customize QTrobot's face and behaviors to adapt to different scenarios, themes, or interaction needs. This section covers how to modify QTrobot's facial appearance, including the background, eyes, and viseme mouth shapes, allowing you to create unique expressions and adapt to events like a Halloween party.

We'll also explain how to adjust key configuration parameters in qt_robot_interface to control QTrobot's eye positions, blinking intervals, and lip synchronization. These customizations enable you to create a more engaging and tailored interaction experience with QTrobot.

Customizing QTrobot's face

You can customize QTrobot's face by modifying the default facial appearance to adapt to different scenarios and themes or by introducing an entirely new face.

First, let's understand the components of QTrobot's facial visuals and how they are rendered.

QTrobot's face consists of the following components:

- Background face: A background image (face.png) that serves as the base for the face.

- Eyes: Separate images for the left and right eyes (eye_l.png and eye_r.png) that overlay the background face.

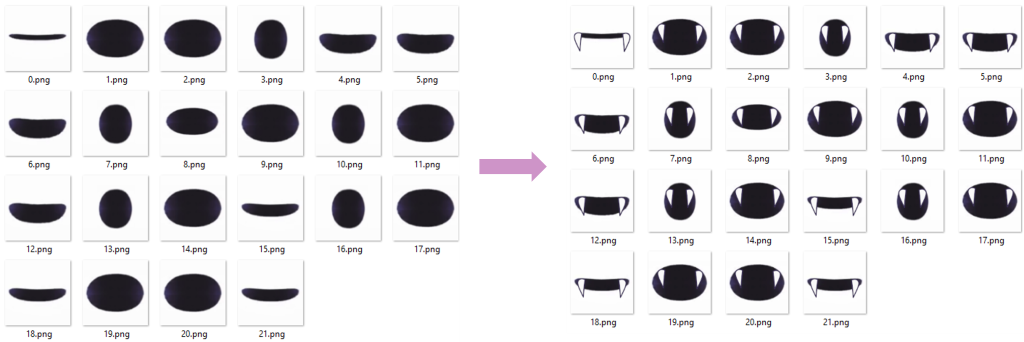

- Viseme mouth shapes: A set of 22 mouth shapes corresponding to viseme IDs 0 to 21 (0.png to 21.png).

The background and eye images are stored in a specific folder, whose path is set by the idle_path parameter in the qt_robot_interface configuration file (e.g., qtrobot-interface.yaml).

When qt_robot_interface starts, it loads the background and eye images from this idle_path and overlays the eyes onto the background face to produce the final, animated face.

The positions of the left and right eyes are specified by the left_eye_pos and right_eye_pos parameters in the configuration file.

QTrobot also animates blinking; the minimum and maximum intervals between blinks are defined by blink_interval_min and blink_interval_max.
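How qt_robot_interface picks each interval within these bounds is not described here. As an illustration only, the sketch below assumes a uniform random draw between blink_interval_min and blink_interval_max and lists the blink times that would result over a given period; blink_schedule is a hypothetical helper, not part of qt_robot_interface.

```python
import random


def blink_schedule(total_seconds, interval_min=5, interval_max=8, seed=None):
    """Return blink times (in seconds) within `total_seconds`.

    Each gap between blinks is drawn uniformly from [interval_min, interval_max];
    this is an assumed model of the behavior, used only for illustration.
    """
    if not 0 < interval_min <= interval_max:
        raise ValueError("need 0 < blink_interval_min <= blink_interval_max")
    rng = random.Random(seed)  # seeded generator for reproducible schedules
    times, t = [], 0.0
    while True:
        t += rng.uniform(interval_min, interval_max)
        if t > total_seconds:
            return times
        times.append(t)
```

With the default 5 to 8 second bounds, a one-minute period always contains between 7 and 12 blinks under this model.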

Additionally, during startup, qt_robot_interface loads all the viseme images from the path specified by the viseme_path parameter.

When the /qt_robot/behavior/talkText interface is used to make QTrobot speak, the corresponding viseme mouth shape is overlaid on the background face, synchronized with the audio playback. The position where the viseme mouth shapes are rendered is specified by the mouth_pos parameter in the configuration file.
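Since qt_robot_interface loads these files at startup, a missing image is easiest to catch before restarting the service. The sketch below, assuming the file names listed above (face.png, eye_l.png, eye_r.png, and 0.png to 21.png for viseme IDs 0 to 21), reports every expected file that is absent from an idle or viseme folder; missing_face_files is a hypothetical helper.

```python
from pathlib import Path

IDLE_FILES = ("face.png", "eye_l.png", "eye_r.png")  # background face and both eyes
VISEME_IDS = range(22)  # viseme IDs 0..21 -> 0.png ... 21.png


def missing_face_files(idle_dir, viseme_dir):
    """Return the paths of expected face images that do not exist."""
    idle_dir, viseme_dir = Path(idle_dir), Path(viseme_dir)
    missing = [str(idle_dir / name) for name in IDLE_FILES
               if not (idle_dir / name).is_file()]
    missing += [str(viseme_dir / f"{i}.png") for i in VISEME_IDS
                if not (viseme_dir / f"{i}.png").is_file()]
    return missing
```

For example, missing_face_files('/home/qtrobot/robot/data/emotions/QT/idle', '/home/qtrobot/robot/data/emotions/QT/viseme') should return an empty list when the face set is complete.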

- All images must be placed in subfolders within QTrobot's default emotion data path, which is /home/qtrobot/robot/data/emotions on QTRP.

- In the current version of qt_robot_interface, all images must be in .png format. However, the alpha channel is not used; instead, pure black pixels (0, 0, 0) in the eye or viseme images are treated as transparent when overlaid on the background. Therefore, any pure black pixels will not appear in the final render.

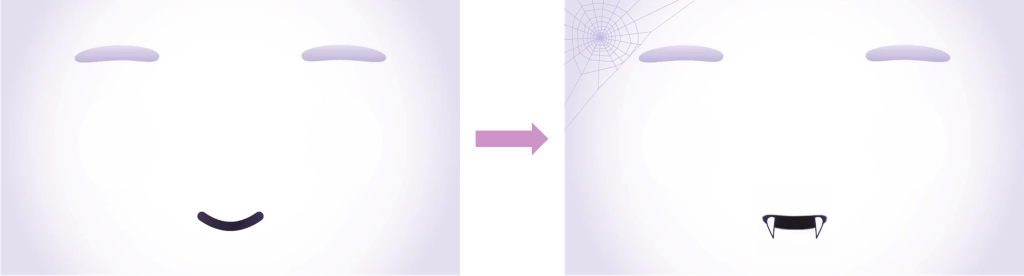
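The black-as-transparent rule can be illustrated in plain Python by representing an image as rows of (R, G, B) tuples. This is a sketch of the rule described above, not qt_robot_interface's renderer: overlay is a hypothetical helper that treats (top, left) as the sprite's top-left corner, which may differ from how left_eye_pos, right_eye_pos, and mouth_pos are interpreted. Since only pure (0, 0, 0) is dropped, a design that needs visibly black pixels in the eyes or visemes can use a near-black color such as (1, 1, 1).

```python
BLACK = (0, 0, 0)


def overlay(base, sprite, top, left):
    """Return a copy of `base` with `sprite` pasted at (top, left).

    Pure black sprite pixels are skipped (treated as transparent), and pixels
    that would fall outside `base` are clipped. `base` is not modified.
    """
    out = [row[:] for row in base]
    for y, row in enumerate(sprite):
        for x, px in enumerate(row):
            if px == BLACK:
                continue  # pure black is never drawn
            by, bx = top + y, left + x
            if 0 <= by < len(out) and 0 <= bx < len(out[by]):
                out[by][bx] = px
    return out
```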

Example of Face Customization

Imagine Halloween is approaching, and QTrobot is attending a Halloween costume party. You want to give QTrobot some makeup and vampire teeth to fit the theme. Here's how you can customize the face:

- Create a working folder on QTPC (or your own laptop) and copy the default images:

mkdir ~/costume_party  # Create a working folder on QTPC
ssh developer@qtrp  # SSH into QTRP
su qtrobot  # Switch to the qtrobot user
scp -r ~/robot/data/emotions/QT/idle qtpc:/home/qtrobot/costume_party  # Copy the default idle face images to your working folder
scp -r ~/robot/data/emotions/QT/viseme qtpc:/home/qtrobot/costume_party  # Copy the viseme images
exit  # Leave the qtrobot user shell
exit  # Close the SSH session to QTRP

- Edit the default face image ~/costume_party/idle/face.png in your working folder to add the makeup and vampire teeth. You can use GIMP or any other photo editor of your choice.

- Optionally, edit the viseme images in your working folder (~/costume_party/viseme/) to add vampire teeth.

- Copy the edited images back to QTRP under the default emotion data path:

ssh developer@qtrp  # SSH into QTRP
su qtrobot  # Switch to the qtrobot user
scp -r qtpc:/home/qtrobot/costume_party ~/robot/data/emotions/  # Copy your edited images to the emotion data path on QTRP

- Update the parameters in the configuration file (e.g., qtrobot-interface.yaml) on QTRP to use the new facial images:

sudo nano /opt/ros/noetic/share/qt_robot_interface/config/qtrobot-interface.yaml

Update the parameters as follows (the paths are relative to the emotion data path):

qt_robot_interface:
  emotion:
    idle_path: 'costume_party/idle'  # Path to the folder containing idle face images (background face, left and right eyes)
    viseme_path: 'costume_party/viseme'  # Path to the folder containing viseme mouth images

- Restart the qt_robot_interface service on QTRP to apply the changes:

sudo systemctl restart qt_robot_interface.service

If qt_robot_interface fails to start, check its logs on QTRP to identify the issue:

journalctl -u qt_robot_interface.service
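If you prefer to work directly on QTRP (for example, editing the images there), the copying steps can be scripted. The sketch below, meant to be run as the qtrobot user, copies the default QT/idle and QT/viseme folders into a new theme folder under the emotion data path and returns the idle_path and viseme_path values for the configuration file. create_theme is a hypothetical helper; shutil.copytree refuses to copy onto an existing destination, so an existing theme is never overwritten.

```python
import shutil
from pathlib import Path

# Default emotion data path on QTRP.
EMOTIONS_DIR = Path("/home/qtrobot/robot/data/emotions")


def create_theme(name, base="QT", emotions_dir=EMOTIONS_DIR):
    """Copy the idle and viseme folders of `base` into a new theme `name`.

    Returns the idle_path/viseme_path values to put in qtrobot-interface.yaml.
    Raises FileExistsError if the destination folders already exist.
    """
    emotions_dir = Path(emotions_dir)
    for part in ("idle", "viseme"):
        shutil.copytree(emotions_dir / base / part, emotions_dir / name / part)
    return {"idle_path": f"{name}/idle", "viseme_path": f"{name}/viseme"}
```

Calling create_theme('costume_party') would give you editable copies under /home/qtrobot/robot/data/emotions/costume_party, matching the paths used in the configuration step.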

Customizing qt_robot_interface Configuration to Suit Your Needs

The qt_robot_interface configuration file, located at /opt/ros/noetic/share/qt_robot_interface/config/qtrobot-interface.yaml, allows you to tailor various aspects of QTrobot's facial expressions, gaze behavior, and lip synchronization.

Below is an explanation of each parameter and how you can customize them:

- startup_emotion: Sets a short video (e.g., 'QT/yawn.avi') to be displayed when the robot wakes up. You can replace it with any video you want QTrobot to show during startup.

- idle_path: Defines the path to the folder containing your idle face images: the background face, left eye, and right eye.

- viseme_path: Specifies the path to the folder containing the viseme mouth images used for lip synchronization. By providing custom viseme images, you can personalize the mouth movements to better match different speech styles or languages.

- right_eye_pos and left_eye_pos: Set the neutral (default) positions of the right and left eyes, respectively. Adjust these values to define where the eyes naturally rest, so that the gaze behavior matches the robot's face design.

- mouth_pos: Sets the neutral position of the mouth. Adjusting this value ensures that the mouth aligns correctly with the face design, especially if you've customized the face or viseme images.

- blink_interval_min and blink_interval_max: Control how often the robot blinks by specifying the minimum and maximum time (in seconds) between blinks.

By modifying these parameters, you can fully customize QTrobot's facial appearance, lip sync, and gaze movements, creating a more engaging and tailored interaction experience.

qt_robot_interface:
  emotion:
    startup_emotion: 'QT/yawn.avi'  # Optional: Set a short video to be displayed when the robot wakes up
    idle_path: 'QT/idle'  # Path to the folder containing idle face images (background face, left and right eyes)
    viseme_path: 'QT/viseme'  # Path to the folder containing viseme mouth images
    right_eye_pos: [140, 168]  # Neutral position of the right eye (x, y coordinates)
    left_eye_pos: [532, 168]  # Neutral position of the left eye (x, y coordinates)
    mouth_pos: [336, 320]  # Neutral position of the mouth (x, y coordinates)
    blink_interval_min: 5  # Minimum interval (in seconds) between blinks
    blink_interval_max: 8  # Maximum interval (in seconds) between blinks
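Before restarting the service after editing the file, a quick sanity check can catch typos. The sketch below loads the emotion section with PyYAML (assumed to be installed, as it normally is alongside ROS Noetic) and checks the position and blink parameters. The checks themselves (two-integer [x, y] positions, 0 < min <= max, optional bounds against a face_size you supply) are assumptions about what valid values look like, not rules documented for qt_robot_interface; both function names are hypothetical.

```python
def load_emotion_config(path):
    """Read the qt_robot_interface/emotion section of qtrobot-interface.yaml."""
    import yaml  # PyYAML, assumed to be available on the robot
    with open(path) as f:
        return yaml.safe_load(f)["qt_robot_interface"]["emotion"]


def check_emotion_config(cfg, face_size=None):
    """Return a list of human-readable problems found in an emotion config dict.

    face_size: optional (width, height) of face.png, used to bounds-check positions.
    """
    problems = []
    for key in ("right_eye_pos", "left_eye_pos", "mouth_pos"):
        pos = cfg.get(key)
        if not (isinstance(pos, (list, tuple)) and len(pos) == 2
                and all(isinstance(v, int) for v in pos)):
            problems.append(f"{key}: expected [x, y] integers, got {pos!r}")
        elif face_size and not (0 <= pos[0] < face_size[0] and 0 <= pos[1] < face_size[1]):
            problems.append(f"{key}: {pos} lies outside a {face_size[0]}x{face_size[1]} face image")
    lo, hi = cfg.get("blink_interval_min"), cfg.get("blink_interval_max")
    if not all(isinstance(v, (int, float)) for v in (lo, hi)):
        problems.append("blink_interval_min/max must both be numbers")
    elif not 0 < lo <= hi:
        problems.append(f"blink interval [{lo}, {hi}] must satisfy 0 < min <= max")
    return problems


# Example on QTRP:
#   cfg = load_emotion_config('/opt/ros/noetic/share/qt_robot_interface/config/qtrobot-interface.yaml')
#   print(check_emotion_config(cfg))
```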

Copyright

© 2024 LuxAI S.A. All rights reserved.